Model Averaging Machine Learning

Surprisingly, the same observation can be made in the field of machine learning. Yet in the context of limited data, many questions remain unanswered.

To do model averaging, we first estimate 2^5 = 32 regressions, one for each subset of the five candidate predictors. Another form of model averaging is a weighted average between a locally trained model and the global model. This step is analogous to the quality assurance aspect of application development.
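
The subset enumeration can be sketched as follows. This is a minimal illustration, not the author's code: it assumes ordinary least squares with an intercept, uses NumPy's `lstsq`, and generates hypothetical synthetic data in place of a real dataset. The helper name `fit_all_subsets` is made up for this example.

```python
import itertools

import numpy as np


def fit_all_subsets(X, y):
    """Fit an OLS regression (with intercept) for every subset of X's columns.

    Returns a list of (subset, coefficients, rss) tuples. With 5 candidate
    predictors this yields 2**5 = 32 regressions, including the
    intercept-only model (empty subset).
    """
    n, p = X.shape
    results = []
    for k in range(p + 1):
        for subset in itertools.combinations(range(p), k):
            # Design matrix: a column of ones plus the selected predictors.
            design = np.column_stack([np.ones(n)] + [X[:, j] for j in subset])
            coef, *_ = np.linalg.lstsq(design, y, rcond=None)
            resid = y - design @ coef
            results.append((subset, coef, float(resid @ resid)))
    return results


# Hypothetical data: only predictors 0 and 2 actually matter.
rng = np.random.default_rng(0)
X = rng.normal(size=(100, 5))
y = 1.0 + 2.0 * X[:, 0] - 0.5 * X[:, 2] + rng.normal(scale=0.5, size=100)
models = fit_all_subsets(X, y)
print(len(models))  # 32
```

Each returned residual sum of squares (RSS) is what a fit-based weighting scheme would later consume.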

Here, We Are First Building All The Candidate Regressions.

The important difference is that the standard deviation of performance shrinks from 1.4% for a single model to 0.6% for an ensemble of five models. A model averaging ensemble combines the predictions from each model equally and often performs better on average than a given single model. Third, I compare model averaging to machine learning, which is becoming more widely used in economics.
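
Equal-weight averaging is simple enough to show directly. The sketch below assumes each model has already produced one numeric prediction (for example, a probability) per example; the three prediction lists are hypothetical.

```python
def average_predictions(predictions):
    """Equal-weight ensemble: the mean of all models' predictions per example.

    predictions: one list per model, each holding that model's prediction
    for every example (all lists must have the same length).
    """
    n_models = len(predictions)
    # zip(*predictions) groups the models' predictions example by example.
    return [sum(per_example) / n_models for per_example in zip(*predictions)]


# Hypothetical predicted probabilities from three models on two examples.
preds = [
    [0.9, 0.2],
    [0.7, 0.4],
    [0.8, 0.3],
]
print(average_predictions(preds))  # approximately [0.8, 0.3]
```

Every model counts the same here; weighting schemes replace the `1 / n_models` factor with per-model weights.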


Evaluate the model’s performance and set up benchmarks. If the ensemble succeeds, create your final model by averaging the results of the models that make up the successful ensemble. We might expect a given ensemble of five models on this problem to perform between about 74% and about 78% with a likelihood of 99%.
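
A range like this can be estimated from a mean and a standard deviation if performance is assumed to be roughly normally distributed. The sketch below is hypothetical: it takes 76% (the midpoint of the quoted range) as the mean and 0.6% as the ensemble standard deviation, and uses the standard library's `statistics.NormalDist` to get the 99% critical value.

```python
from statistics import NormalDist


def normal_interval(mean, std, confidence=0.99):
    """Two-sided interval that covers `confidence` of a normal distribution."""
    # For 99% coverage, 0.5% is left in each tail, so z = inv_cdf(0.995).
    z = NormalDist().inv_cdf(0.5 + confidence / 2)
    return mean - z * std, mean + z * std


# Assumed inputs: mean accuracy 76%, ensemble standard deviation 0.6%.
low, high = normal_interval(0.76, 0.006)
print(f"{low:.4f} to {high:.4f}")  # 0.7445 to 0.7755
```

Under these assumptions the interval is roughly 74.5% to 77.5%, which lies inside the 74% to 78% range quoted above.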

The Weight Increases With Data Fit But Decreases With Model Complexity (Given The Same Fit, A Regression With 4 Variables Will Get More Weight Than A Regression With 5).
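
The text does not name a weighting formula, so the following is an assumption: a common choice is BIC weights, where each model's weight is proportional to exp(-BIC / 2). Under the Gaussian likelihood, BIC = n·ln(RSS/n) + k·ln(n). A lower RSS (better fit) lowers BIC and raises the weight, while each extra parameter adds ln(n) and lowers it. The RSS and sample size below are hypothetical.

```python
import math


def bic(rss, n, k):
    """Gaussian-likelihood BIC up to an additive constant: n*ln(RSS/n) + k*ln(n)."""
    return n * math.log(rss / n) + k * math.log(n)


def bic_weights(bics):
    """Normalized weights proportional to exp(-BIC / 2)."""
    best = min(bics)
    # Subtracting the smallest BIC avoids overflow/underflow in exp().
    raw = [math.exp(-(b - best) / 2) for b in bics]
    total = sum(raw)
    return [r / total for r in raw]


# Hypothetical case: same fit (RSS = 50, n = 100), 4 vs. 5 predictors.
# k counts the intercept, hence 4 + 1 and 5 + 1 parameters.
n, rss = 100, 50.0
w4, w5 = bic_weights([bic(rss, n, 4 + 1), bic(rss, n, 5 + 1)])
print(round(w4, 3), round(w5, 3))  # 0.909 0.091
```

With identical fit, the extra variable costs ln(100) in BIC, so the weight ratio is exp(-ln(100) / 2) = 0.1 and the 4-variable regression gets more weight. AIC weights behave the same way with a per-parameter penalty of 2 instead of ln(n).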

A case study with Apache Spark: the increasing popularity of Apache Spark has attracted many users to put their data into its ecosystem. Model averaging only helps if the models in the ensemble make different predictions or recommendations; consult the machine learning model types mentioned above for your options.
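
One rough way to check whether an ensemble is diverse enough to benefit from averaging is the mean pairwise disagreement rate: the fraction of examples on which two models predict different labels, averaged over all pairs. This metric is an illustrative choice, not one the text prescribes, and the label lists are hypothetical.

```python
from itertools import combinations


def mean_pairwise_disagreement(label_predictions):
    """Average, over all model pairs, of the fraction of differing labels."""
    rates = [
        sum(a != b for a, b in zip(p, q)) / len(p)
        for p, q in combinations(label_predictions, 2)
    ]
    return sum(rates) / len(rates)


# Identical models: averaging them cannot change anything.
same = [[1, 0, 1, 1]] * 3
# Hypothetical diverse models on the same four examples.
diverse = [[1, 0, 1, 1], [1, 1, 1, 0], [0, 0, 1, 1]]
print(mean_pairwise_disagreement(same))     # 0.0
print(mean_pairwise_disagreement(diverse))  # 0.5
```

A value near zero signals that the ensemble members are near-copies, so their average will match any single member.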

This Step Involves Choosing A Model Technique, Model Training, Selecting Algorithms, And Model Optimization.

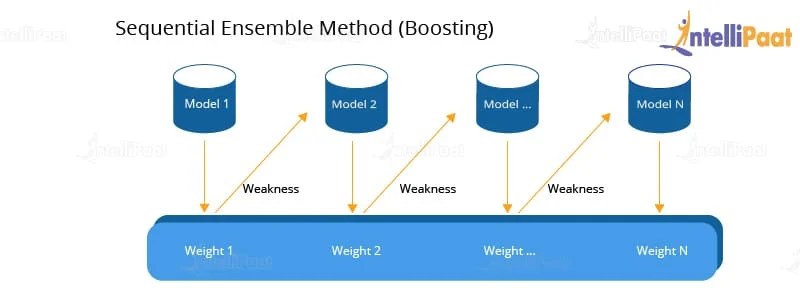

Then we assign each model a weight and compute a weighted average over the 32 regressions. Traditional machine learning methods can reduce overfitting by using bagging or boosting to train several diverse models. This work is licensed under a Creative Commons Attribution 4.0 International License.
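
The weighted average over subset regressions can be sketched for the slope coefficients. The sketch assumes the weights already sum to 1. It also assumes a common convention, not stated in the text: a predictor left out of a regression contributes a coefficient of 0 for that model. The models and weights below are hypothetical.

```python
def average_coefficients(models, weights, p):
    """Weighted average of slope coefficients across subset regressions.

    models: list of (subset, slopes) pairs, where slopes[i] is the
            coefficient of predictor subset[i] in that regression.
    weights: one weight per model, assumed to sum to 1.
    p: total number of candidate predictors.
    A predictor absent from a model implicitly contributes 0 for that model.
    """
    avg = [0.0] * p
    for (subset, slopes), w in zip(models, weights):
        for j, b in zip(subset, slopes):
            avg[j] += w * b
    return avg


# Hypothetical example with 3 candidate predictors and 3 fitted models.
models = [
    ((0,), [2.0]),          # only predictor 0
    ((0, 1), [1.8, 0.4]),   # predictors 0 and 1
    ((), []),               # intercept-only model
]
weights = [0.5, 0.3, 0.2]
print(average_coefficients(models, weights, p=3))  # approximately [1.54, 0.12, 0.0]
```

Averaging predictions instead of coefficients works the same way: multiply each model's prediction by its weight and sum.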

Frequently An Ensemble Of Models Performs Better Than Any Individual Model, Because The Various Errors Of The Models Average Out.
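
A small simulation shows the averaging-out effect under idealized assumptions: every model is unbiased and its errors are independent Gaussian noise. Real models usually have correlated errors, so the gain is smaller in practice. In the ideal case, averaging k models divides the error variance by k and the standard deviation by √k. For five models, 1.4% / √5 ≈ 0.63%, which is close to the 0.6% figure quoted earlier. The function name and parameters are hypothetical.

```python
import random
import statistics


def simulate(n_models=5, n_points=10_000, noise=1.0, seed=0):
    """Compare single-model MSE with equal-weight ensemble MSE.

    Assumes each model predicts truth + independent N(0, noise**2) error.
    """
    rng = random.Random(seed)
    truth = [rng.uniform(-1, 1) for _ in range(n_points)]
    preds = [[t + rng.gauss(0, noise) for t in truth] for _ in range(n_models)]

    # Mean squared error of each model on its own, averaged over models.
    single_mse = statistics.mean(
        statistics.mean((p - t) ** 2 for p, t in zip(model, truth))
        for model in preds
    )
    # Equal-weight ensemble: average the models' predictions per point.
    ensemble = [sum(col) / n_models for col in zip(*preds)]
    ensemble_mse = statistics.mean((a - t) ** 2 for a, t in zip(ensemble, truth))
    return single_mse, ensemble_mse


single_mse, ensemble_mse = simulate()
print(single_mse, ensemble_mse)
```

Under these assumptions the single-model MSE should come out near `noise**2 = 1.0` and the ensemble MSE near `1.0 / 5 = 0.2`.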

In machine learning, particularly in the creation of artificial neural networks, ensemble averaging is the process of creating multiple models and combining them to produce a desired output, as opposed to creating just one model. The models can be of different types, such as linear regression or gradient boosted trees, and each contributes to the final prediction. When a list of candidate models is considered, model selection (MS) procedures guide the search for the best model.
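
A heterogeneous ensemble can be sketched by averaging a linear regression with a nonlinear model. To keep the example dependency-light, a k-nearest-neighbour regressor stands in for gradient boosted trees. The substitution, the helper names `fit_linear`, `fit_knn`, and `ensemble_average`, and the synthetic data are all hypothetical.

```python
import numpy as np


def fit_linear(X, y):
    """OLS with intercept; returns a prediction function."""
    design = np.column_stack([np.ones(len(X)), X])
    coef, *_ = np.linalg.lstsq(design, y, rcond=None)
    return lambda Xn: np.column_stack([np.ones(len(Xn)), Xn]) @ coef


def fit_knn(X, y, k=5):
    """k-nearest-neighbour regressor (stand-in for a tree ensemble)."""
    def predict(Xn):
        # Euclidean distance from each new point to every training point.
        d = np.linalg.norm(Xn[:, None, :] - X[None, :, :], axis=2)
        nearest = np.argsort(d, axis=1)[:, :k]
        return y[nearest].mean(axis=1)
    return predict


def ensemble_average(predictors, Xn):
    """Equal-weight average of each model's predictions."""
    return np.mean([predict(Xn) for predict in predictors], axis=0)


# Hypothetical data with a mildly nonlinear signal.
rng = np.random.default_rng(1)
X = rng.uniform(-2, 2, size=(200, 1))
y = np.sin(X[:, 0]) + 0.5 * X[:, 0] + rng.normal(scale=0.1, size=200)
models = [fit_linear(X, y), fit_knn(X, y, k=10)]
X_new = np.linspace(-2, 2, 5).reshape(-1, 1)
print(ensemble_average(models, X_new))
```

In practice, scikit-learn's `VotingRegressor` performs the same equal-weight averaging over fitted estimators such as `LinearRegression` and `GradientBoostingRegressor`.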
